Anyone Ever Tried XI On An SBC?

Anyone ever tried XI on an SBC (single-board computer)?

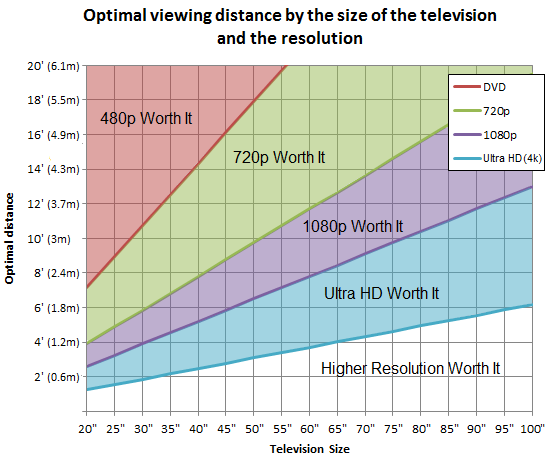

First off: comparing screen resolutions on their own is pointless, as resolution is only one of three variables that determine whether it matters or not. You also need to include viewing distance and screen size. There are plenty of handy charts out there that show the "breakpoints" at which a change in resolution is or is not perceptible to a human eye with 20/20 vision, at specific distances on specific screen sizes.

Here's an example:

So let's say you are using a 50" screen and viewing it from less than 2' away (a moderately large screen used pretty close, as a desktop setup for PC gaming). As you can see on the chart, you can still perceive the difference well past Ultra HD. In fact, IIRC, when you're closer than 2' to a large screen you can still easily tell the difference between 4K and 8K, but around 8K-ish is where it starts to peak.
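To put rough numbers on those breakpoints, here's a minimal sketch. It assumes a flat 16:9 panel and the common rule of thumb that 20/20 vision resolves detail down to about 1 arcminute (both are my assumptions, not values read off the chart), and estimates the distance beyond which a single pixel is smaller than that, i.e. where extra resolution stops being visible. The function name is just for illustration.

```python
import math

# Rule of thumb (assumption): 20/20 vision resolves detail down to about 1 arcminute.
ONE_ARCMINUTE_RAD = math.radians(1 / 60)


def max_useful_distance_in(diagonal_in: float, h_pixels: int,
                           aspect_w: float = 16, aspect_h: float = 9) -> float:
    """Distance (inches) beyond which one pixel subtends less than 1 arcminute.

    Closer than this, individual pixels are in principle resolvable,
    so a higher resolution could still look sharper.
    """
    # Physical screen width from the diagonal and the aspect ratio.
    width_in = diagonal_in * aspect_w / math.hypot(aspect_w, aspect_h)
    pixel_pitch_in = width_in / h_pixels
    # A pixel of size p viewed from distance d subtends about atan(p / d).
    return pixel_pitch_in / math.tan(ONE_ARCMINUTE_RAD)


if __name__ == "__main__":
    for name, h_px in [("1080p", 1920), ("4K", 3840), ("8K", 7680)]:
        d = max_useful_distance_in(50, h_px)
        print(f'50" {name}: pixels resolvable closer than ~{d / 12:.1f} ft')
```

Under those assumptions a 50" 1080p panel works out to about 6.5 ft, 4K to about 3.3 ft and 8K to about 1.6 ft, which lines up with being able to tell 4K from 8K at under 2'. Real acuity varies a lot from person to person, so treat these as ballpark figures.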

And with small screens at extremely close distances, the requirement goes way beyond that; it's theorized that even a theoretical "16K" benchmark would still fall short. This case specifically applies to VR headsets, which have already started surpassing 8K and are still well below the necessary benchmark.
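For headsets it's easier to think in pixels per degree than in "K". A minimal sketch, again assuming the 1-arcminute rule of thumb (which works out to 60 pixels per degree), estimates the horizontal pixels per eye needed to match 20/20 acuity across a given field of view. The headset numbers below are hypothetical, and the sketch ignores lens distortion and the way acuity falls off outside the center of vision.

```python
# Assumption: 20/20 acuity ~ 1 arcminute, i.e. 60 pixels per degree.
PIXELS_PER_DEGREE_20_20 = 60


def pixels_needed(fov_degrees: float, ppd: float = PIXELS_PER_DEGREE_20_20) -> int:
    """Horizontal pixels needed to cover fov_degrees at the given pixels per degree."""
    return round(fov_degrees * ppd)


def pixels_per_degree(h_pixels: int, fov_degrees: float) -> float:
    """Average angular pixel density of a display spread across fov_degrees."""
    return h_pixels / fov_degrees


if __name__ == "__main__":
    # Hypothetical headset: 2000 horizontal pixels per eye over a 100-degree FOV.
    print(pixels_per_degree(2000, 100))  # 20.0
    print(pixels_needed(100))            # 6000
```

So a headset spreading about 2000 pixels per eye over 100 degrees delivers roughly a third of the 60 pixels per degree target, which is why per-eye resolution has to climb a long way before VR stops looking soft.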

Or on the other end of the spectrum, with a massive screen like in a movie theatre viewed from only 20-30 feet away (so front-row seats), you'll still be able to perceive the difference between 4K and 8K.

However, as you get farther from the screen, fine detail blurs together and you start to have trouble distinguishing between the two, so if you're sitting in the back row of a movie theatre, you probably wouldn't be able to perceive a difference.

As for frame rates, the human eye does not perceive light in the form of a "frame rate". It can perceive differences even as high as 120 fps, but how perceptible they are depends on a myriad of things, like the color and brightness of the light. Differences are much easier to perceive on bright colors. It's why the commonly cited example is moving the mouse on a white background: that black pattern on a white background is extremely perceptible to the human eye, and yeah, in a case like that you can tell the difference well above 120 fps.

And as others mentioned, whether it's in your peripheral or focal vision matters a tonne too. So do other random *** like how tired you are, how strained your eyes are, how good your eyesight is, whether you are paying attention and looking for it, etc.

But the human eye doesn't see "frames" of data. It constantly moves around your field of view and takes multiple snapshots of information, then your brain pieces that data together into a conceptual idea of what you are seeing. A lot of what you think you are seeing isn't actually there; it's "guesswork" your brain uses to fill in what it thinks ought to be there.

It's why stuff like optical illusions exists. If you realized just how much of what you think you see is actually your brain filling in the data by guessing, you'd be surprised.

For example: if you were staring at a white screen and for 1/120th of a second it flickered to all black and then back to white, you would absolutely, without a doubt, notice that.

But if a screen flickered back and forth between "white" and "ever so slightly off-white" 120 times per second, you would likely not perceive it at all.
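One way to see why those two flicker cases differ so much is contrast. Here's a rough sketch using Michelson contrast, a standard but simplified contrast measure (not a full model of flicker perception), with luminance in arbitrary relative units. The 99% "off-white" value is a made-up example.

```python
def michelson_contrast(l_max: float, l_min: float) -> float:
    """Michelson contrast: (Lmax - Lmin) / (Lmax + Lmin), in the range 0..1."""
    if l_max + l_min == 0:
        return 0.0  # both levels black: no contrast at all
    return (l_max - l_min) / (l_max + l_min)


if __name__ == "__main__":
    frame_ms = 1000 / 120  # one frame at 120 Hz lasts about 8.3 ms
    white, black, off_white = 1.0, 0.0, 0.99  # relative luminance, illustrative only
    print(f"frame: {frame_ms:.1f} ms")
    print(f"white vs black:     {michelson_contrast(white, black):.3f}")
    print(f"white vs off-white: {michelson_contrast(white, off_white):.3f}")
```

A full white-to-black swing has the maximum contrast of 1.0, while white versus 99% white comes out around 0.005, roughly 200 times less, so the same 8.3 ms flicker gives your visual system far less to pick up on.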

And then you get into REALLY crazy stuff, like pupil dilation responses, the fact that your visual system automatically adapts to color and brightness over time (which is what causes those afterimages you see after staring at a light for a while), and even motion adaptation (which is what causes the "waterfall" effect you experience if you watch movie credits scroll for a long while and then look away).

There are so many different mechanisms working together when it comes to our eyesight that trying to simplify it down to "can humans perceive X fps or not" just isn't possible.

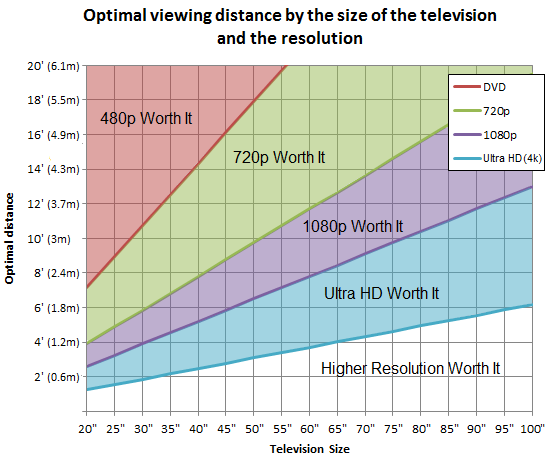

Asura.Memes said: »

Bahamut.Balduran said: »

Asura.Memes said: »

Jetackuu said: »

and? there's a yuge difference between 720p at 20fps and 1080p. I also wouldn't make that bet, but hey.

Plus science shows humans can't really see the difference beyond 20fps. Enjoy pretending you can see 240Hz.

I don't really get your logic. What does playing fullscreen have to do with a player being casual or not? What exactly counts as casual in your view?

That is an ignorant interpretation of single-box players. Playing a single character doesn't make someone casual; you can still be a veteran or hardcore player regardless of the number of characters you play, and there is no evidence to suggest otherwise.

Asura.Memes said: »

Bahamut.Balduran said: »

You clearly have no idea what you are talking about, because the human eye can undoubtedly see a difference between 720p and 1080p.

Refer to your post quoted below. You certainly implied that anything above 720p requires 'superman' vision, so evidently you did.

Asura.Memes said: »

Asura.Memes said: »

Anyone still playing FFXI has spent the last 10-15 years of their life holed up playing this *** excessive amounts and getting no vitamin D. None of you have perfect vision. Also, I said need, not want. There are diminishing returns, of course. 20fps for a PS2 game is fine!

This is nothing more than an opinion, and as previously pointed out, there is indeed a difference in quality between 720p and 1080p, with the latter offering better visuals.

Asura.Memes said: »

Jetackuu said: »

no, no it isn't, and again: you are wrong

also people who only have 1 screen are casual. Not to mention I know several single boxers who I promise are more hardcore than most multiboxers.

But let us face facts: you are clueless and are apparently doubling down on it instead of admitting you are wrong and then flailing and attempting to insult everyone. It'd be hilarious if it weren't so sad.

This thread needs fewer of your failed attempts to insult people and more discussion of the facts about FPS.

Na, what is sad is you and this thread needs less of it, as do I. Bye, Felicia.

Asura.Memes said: »

People take themselves way too seriously here. I was referring to the fps when talking about superman vision. Resolution is pretty irrelevant to the discussion.

If you can't play FFXI in 720p at 20fps, I wonder what hundreds of thousands of us did for many years, huh?

See my statements above. "Superman vision" is not the bar for perceiving FPS differences all the way up to 240 fps and beyond, but on the flip side, higher FPS is not always a good thing.

For example, I actually prefer playing FFXI at 30 FPS over 60, and purposefully have the cap set to 30. Visually it just looks "wrong" to me at 60, because I've been conditioned for over 15 years on 20-30 FPS FFXI, and my muscle memory is now built on that lower frame rate. If I bump up to 60 FPS I start misclicking menus as I navigate and ***, even *** up simple *** like my gil bids on the auction house (though I've caught myself before submitting).

Basically, when I bump up to 60 fps, I make a /lot/ more misclicks. Menu sounds and muscle memory together are pretty important for all the little muscle-memory ***, like rapidly shoving items into trade boxes, moving items around in inventory, macros, etc.

I played at 60 fps for about a week, concluded that unlearning 15 years of muscle memory just isn't gonna happen, and went back to 30.

soralin said: »

For example, I actually prefer playing FFXI at 30 FPS over 60, and purposefully have the cap set to 30. Visually it just looks "wrong" to me at 60, because I've been conditioned for over 15 years on 20-30 FPS FFXI, and my muscle memory is now built on that lower frame rate. If I bump up to 60 FPS I start misclicking menus as I navigate and ***, even *** up simple *** like my gil bids on the auction house (though I've caught myself before submitting).

Basically, when I bump up to 60 fps, I make a /lot/ more misclicks. Menu sounds and muscle memory together are pretty important for all the little muscle-memory ***, like rapidly shoving items into trade boxes, moving items around in inventory, macros, etc.

I played at 60 fps for about a week, concluded that unlearning 15 years of muscle memory just isn't gonna happen, and went back to 30.

Agreed. Furthermore, a common mistake many players make is going into high-population events like 18-man Divergence at 60 FPS and experiencing horrendous interface lag, because no matter what you do, the game will never render smoothly at 60 FPS in those situations. Reverting to 30 FPS greatly improves performance and makes the game much more playable, with far less interface lag.
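The arithmetic behind that: a frame cap gives the game a fixed time budget per frame. Here's a minimal sketch of that budget and of how a simple frame limiter decides how long to wait. The function names are hypothetical, this is not how FFXI or Windower actually implement their caps, and the 25 ms frame cost is a made-up stand-in for a crowded scene.

```python
def frame_budget_ms(target_fps: float) -> float:
    """Time available to produce one frame at the target frame rate."""
    return 1000.0 / target_fps


def limiter_sleep_ms(frame_cost_ms: float, target_fps: float) -> float:
    """How long a simple limiter would sleep after a frame that took frame_cost_ms.

    Zero means the frame used its whole budget (or more), so the cap can't be held.
    """
    return max(0.0, frame_budget_ms(target_fps) - frame_cost_ms)


def achieved_fps(frame_cost_ms: float, target_fps: float) -> float:
    """Effective frame rate when each frame costs frame_cost_ms of work."""
    return 1000.0 / max(frame_cost_ms, frame_budget_ms(target_fps))


if __name__ == "__main__":
    busy_scene_ms = 25.0  # hypothetical per-frame cost in a crowded event
    for fps in (30, 60):
        print(f"{fps} FPS cap: budget {frame_budget_ms(fps):.1f} ms, "
              f"sleep {limiter_sleep_ms(busy_scene_ms, fps):.1f} ms, "
              f"actual ~{achieved_fps(busy_scene_ms, fps):.0f} FPS")
```

With that made-up 25 ms cost, the 30 FPS cap has about 8 ms to spare and holds a steady 30, while the 60 FPS cap can never be met and the rate sags to about 40, swinging further as the load changes. That's one plausible reading of why 30 feels smoother in big events; real interface lag likely involves more than this sketch models.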

what loser plays at 20fps? the game should consistently run at 29.7

There's also a big difference between using, say, 1024x768 on a 15" CRT and using it on a 24-40" LCD panel. It is worth noting that the game isn't even tested at high resolutions. That all being said, the original claim was dumb. Personally I can't see how people can play with multiple windows on one screen; I guess if they're botting it's fine, but otherwise it'd drive me bonkers.
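To put a number on that difference: pixel density (pixels per inch) is the diagonal pixel count divided by the diagonal size. A minimal sketch using the nominal diagonal; CRTs' viewable area was usually a bit smaller than the advertised size, so the real 15" figure would be somewhat higher.

```python
import math


def ppi(h_pixels: int, v_pixels: int, diagonal_in: float) -> float:
    """Pixels per inch for a given resolution and nominal diagonal size."""
    return math.hypot(h_pixels, v_pixels) / diagonal_in


if __name__ == "__main__":
    for size in (15, 24, 40):
        print(f'1024x768 on {size}": {ppi(1024, 768, size):.1f} PPI')
```

1024x768 has a diagonal of exactly 1280 pixels, so it comes out to about 85.3 PPI on 15", 53.3 PPI on 24" and 32.0 PPI on 40": the same image, but each pixel on the 40" panel is about 2.7 times as wide.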

Even 10x10 resolution will work. lol

Please admit though your 1-box casual player theory was a pretty dumb assumption, so we can put this to rest... =P

Asura.Memes said: »

It was tongue-in-cheek, but 99.9% of non-casuals multibox, so I don't get why you're being so pissy about it.

So you are being ironic, and at the same time you keep pushing more rubbish information. Quit contradicting yourself and pulling random statistics out of nowhere.

Bahamut.Balduran said: »

Asura.Memes said: »

It was tongue-in-cheek, but 99.9% of non-casuals multibox, so I don't get why you're being so pissy about it.

So you are being ironic, and at the same time you keep pushing more rubbish information. Quit contradicting yourself and pulling random statistics out of nowhere.

The flailing is sad.

This is the stupidest slapfight I've seen on this site, and that's saying something.

I've topicbanned both of them.

Getting back on topic, this thread is #1 on the FFXI subreddit at the moment and it caught my eye. Obviously it's not an SBC, but I feel like it captures the spirit of it, and OP might find it relevant to their interests.

https://www.reddit.com/r/ffxi/comments/itc2hc/as_an_og_player_i_never_thought_i_would_be_able/

That takes me back to the ye olde Logitech keyboard controller.

Honestly, I wonder how hard it would be to convert a PlayStation 2 controller port to a USB input device. This controller is without a doubt the best FFXI experience I've ever had. It was bulky, but it sits really nicely in your lap on a couch, and it's the only way I was ever able to lounge and play FFXI. I've found anything else, like normal keyboards, too bulky, but I've also found that keyboard + game controller doesn't work well at all (having to set down one to pick up the other suuuuucks).

Nothing I've seen has managed to capture this Logitech controller's comfort level.

If anyone knows of a way to convert PS2 DualShock output => PC USB input, lemme know!

Edit: Oh ***, apparently adapters exist!

Damn, this honestly sounds more and more appealing by the minute. I might genuinely consider this...

I learned from some old threads on the forums that people were able to get FFXI running on some pretty barebones Windows tablets, some with only 2GB of memory and 1.33 GHz processors. With that in mind, the requirements look pretty easy to meet, so I've purchased an Atomic Pi dev board to test this theory.

Basically the plan is this: use a very barebones version of Windows 10, then install drivers, FFXI and Windower (it ain't worth playing without).

I will check the performance at various resolutions (no arguing about resolutions plz).

**Update:** the Atomic Pi is larger than some off-the-shelf mini PCs; it's about the size of a Mini-ITX motherboard. After setting it up I can confirm the game runs like garbage. You could use it as a low-wattage way to keep a mule character logged in 24/7 or something, I guess.

To answer the question posed: yes, if you stretch the definition of an SBC, you can get FFXI running on one. No, you can't on a Raspberry Pi / ODROID. And there is just about no reason to do so.

as someone who came in on the PS2, those keyboards are flimsy imo. I had one and it broke for no reason.

All FFXI content and images © 2002-2025 SQUARE ENIX CO., LTD. FINAL FANTASY is a registered trademark of Square Enix Co., Ltd.